Why did I do that?

A big part of juggling the data of multiple companies on a centralized platform is adhering to multiple data protection laws. The overall process the incoming data goes through is probably too long for an intro section, but one important step of it is data encryption.

Currently, it happens as a Python script over a Pandas dataframe.

import six
from Cryptodome.Cipher import AES
import multiprocessing as mp
import base64

aes_key = b"an example very very secret key."

def encrypt_AES(text, key=aes_key):
    cipher = AES.new(key, AES.MODE_SIV)
    if isinstance(text, six.string_types):
        # Only the last 64 characters of long values are kept
        if len(text) > 64:
            text = text[-64:]
        out, tag = cipher.encrypt_and_digest(text.encode('utf-8'))
        # Output is base64(ciphertext) immediately followed by base64(tag)
        return base64.b64encode(out).decode("utf-8") + base64.b64encode(tag).decode("utf-8")
    else:
        # Non-string values (NaN, numbers) pass through untouched
        return text

pandas_df['name'] = pandas_df['name'].apply(lambda x: encrypt_AES(x, aes_key))

Well, not exactly like that, but you get the idea: take an encryption function from an established Python package and apply it to one or more columns of a Pandas dataframe.
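One detail of encrypt_AES worth spelling out is its output format: the base64 ciphertext and the base64 tag are simply glued together. Here is a minimal sketch of how such a value could be taken apart again, assuming the 16-byte tag that SIV mode produces. split_token is a hypothetical helper of mine, and the bytes in the demo are stand-ins, not real AES output:

```python
import base64

# base64 of a 16-byte tag is always 24 characters long (including padding)
TAG_B64_LEN = 24


def split_token(token):
    """Split 'base64(ciphertext) + base64(tag)' back into two raw byte strings."""
    ciphertext_b64 = token[:-TAG_B64_LEN]
    tag_b64 = token[-TAG_B64_LEN:]
    return base64.b64decode(ciphertext_b64), base64.b64decode(tag_b64)


# Stand-in bytes for the demo (assumption: not produced by a real cipher)
fake_ciphertext = b"\x01\x02\x03\x04\x05"
fake_tag = bytes(range(16))
token = base64.b64encode(fake_ciphertext).decode("utf-8") + base64.b64encode(fake_tag).decode("utf-8")

ciphertext, tag = split_token(token)
```

The two byte strings could then be handed to decrypt_and_verify on a fresh SIV cipher created with the same key.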

Don’t change what’s working

It does the trick, so why would I want to change anything about it? Pandas is just not built for even moderate data sizes. The apply method has to iterate over each value in a series to apply the function. If you have multiple columns you want to encrypt, you will have to iterate multiple times. Due to the nature of the encryption function, no vectorization optimizations are available to you. At least, not to my knowledge, but you’re welcome to prove me wrong. Simply put, the process is slow.

And since I’m running this exact type of operation over hundreds of datasets daily, this inefficiency starts to add up.

Pandas is not the tool

Alright, I see you, data analysts and scientists, starting to raise your pitchforks! Honestly, I love Pandas, it was the backbone of like 90% of data science out there before the LLM hype took over.

And yet, it has issues. It is hungry for RAM, it is a mess of data types. Ever had to deal with NaNs in an integer field? Yeah…

Polars to the rescue!

I don’t really want to write yet another post about Polars supremacy. One should hope that this is common knowledge by now. Rust-based tool written from the ground up with the specific goal to be better and faster than Pandas. So many cool features, so much speed!

But today we’re interested in a relatively new development: the ability to extend the core functionality of Polars by writing plugins in Rust. The idea is pretty simple - you write whatever you want in Rust, and then you can magically call that same function in Python code as a method of Polars.

Btw, if you haven’t had the chance to try Rust out, I would highly recommend it! I don’t have a CS background, so this pretty hardcore language did not come easy for me. Matter of fact, I’m about as confident in my knowledge of Rust, as I am in becoming an undisputed lightweight champion of the world in UFC - as in, not very much.

Thanks to Marco Gorelli, who spent his personal time to come up with this amazing guide on writing plugins, everybody can build something on their own and share it with the world.

Building an encryption plugin

I was already on the Polars train, but now I needed to add the most important part to make the switch feasible. Because if you’re about to change something in your production pipelines, you best be sure that you’re doing it for the right reasons and with the right justifications. That means I can’t just take the very same Python function and force-feed it to Polars: that would give me pretty much the same performance, but with a new component. So, why even do that at all?

Which is why I took this existing crate of an encryption algorithm to use in my plugin. Parts of it have already been audited for security, and it boasts performance and security advantages over other implementations. To be completely honest with you, I understand almost nothing of cryptography, so I’m not going to pretend I know exactly how it works.

Combining that with what Marco has taught me was not exactly easy, but at the same time, a better SWE could’ve easily got it done in a day.

And yet, I made it - look, it even has its own PyPI page!

#[polars_expr(output_type=String)]
fn encrypt(inputs: &[Series], kwargs: KeyKwargs) -> PolarsResult<Series> {
    // The first (and only) input is the string column to encrypt
    let s = &inputs[0];
    let ca = s.str()?;

    // Build the cipher and nonce once, outside the per-value closure
    let cipher = Aes256GcmSiv::new_from_slice(&kwargs.key).expect("key length should be correct");
    let nonce = Nonce::from_slice(&kwargs.nonce);

    let encrypted_values = ca.apply_to_buffer(|value: &str, output: &mut String| {
        let encrypted_data = cipher
            .encrypt(nonce, value.as_bytes())
            .expect("encryption should not fail");
        let encoded_data = base64::encode(encrypted_data);
        // Write the base64 text into the reusable output buffer
        write!(output, "{}", encoded_data).unwrap();
    });

    Ok(encrypted_values.into_series())
}

A lot to unpack here if you haven’t had some experience with Rust. The fun part is that we’re creating a single string object and constantly rewriting it. And, for some reason, that is much faster than creating a new object every time. One day I will be smart enough to understand why, but not today.

Essentially, this Rust code takes a Polars series, does some magic on it and returns a Polars series. And since it is Rust, the work runs outside that pesky Python GIL, so Polars is free to run the expressions on multiple threads.

This is the implementation in my GitHub, if you want to take a peek.

Benchmarking

Naturally, whatever you did, you have to measure. Let’s do one together! But before we do that, I have to come clean. See, I don’t use the exact same algorithms - in Python, I use SIV, but in Rust I go for GCM-SIV. Almost the same, but different still. And there is no nonce in the Python version, but there is one in Rust. Nonce stands for “number used once”, by the way.

First off, we will need an environment that has both the Python and the Rust implementation:

import pandas as pd
import random
from datetime import datetime, timedelta
from polars_encryption import encrypt, decrypt
import time
import polars as pl
import six
from Cryptodome.Cipher import AES
import multiprocessing as mp
import base64
import matplotlib.pyplot as plt
import numpy as np

Next, we’ll need some data to test encryption with. No specific rules here, but I wanted to have multiple columns, with at least two of those being fit for encryption.

def generate_data(num_rows):
    """
    Generates a list of dictionaries containing random data for specified columns.

    Args:
        num_rows: The number of rows to generate.

    Returns:
        A list of dictionaries, where each dictionary represents a data row.
    """
    letters = 'abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ'
    data = []
    for _ in range(num_rows):
        # Generate random date within the last year
        date = datetime.now() - timedelta(days=random.randint(0, 365))
        # Generate random integer id (may repeat)
        id = random.randint(1, 1000)
        # Generate random amount between 1 and 100000
        amount = random.uniform(1, 100000)
        # Generate random name with 5-10 characters
        name_length = random.randint(5, 10)
        name = ''.join(random.choice(letters) for i in range(name_length))
        # Generate random surname with 3-14 characters
        surname_length = random.randint(3, 14)
        surname = ''.join(random.choice(letters) for i in range(surname_length))
        data.append({
            "date": date.strftime("%Y-%m-%d"),  # Format date as YYYY-MM-DD
            "id": id,
            "amount": amount,
            "name": name,
            "surname": surname
        })
    return data

# We will do this multiple times, but by hand, to avoid accidental OOM

# # Generate 1,000,000 rows of data
# data = generate_data(1000000)

# # Generate 10,000,000 rows of data
# data = generate_data(10000000)

# Generate 100,000,000 rows of data
data = generate_data(100000000)
print("Data generated!")

We will generate 1, 10 and 100 million rows of data. Yes, I know that you can encapsulate all of that into one function, so that you can run it once and have benchmark data. I was lazy, and OOM is a bitch.
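If you want to sidestep the OOM problem on the larger sets, the rows could be produced lazily and consumed in batches. This is only a minimal sketch, assuming the same columns as generate_data; iter_rows, random_word and batched are hypothetical helpers and not part of my benchmark:

```python
import random
import string
from datetime import datetime, timedelta
from itertools import islice


def random_word(rng, min_len, max_len):
    """Random ASCII-letter string with a length between min_len and max_len."""
    length = rng.randint(min_len, max_len)
    return "".join(rng.choice(string.ascii_letters) for _ in range(length))


def iter_rows(num_rows, seed=None):
    """Yield one row dict at a time instead of building one huge list."""
    rng = random.Random(seed)
    now = datetime.now()
    for _ in range(num_rows):
        date = now - timedelta(days=rng.randint(0, 365))
        yield {
            "date": date.strftime("%Y-%m-%d"),
            "id": rng.randint(1, 1000),
            "amount": rng.uniform(1, 100000),
            "name": random_word(rng, 5, 10),
            "surname": random_word(rng, 3, 14),
        }


def batched(rows, batch_size):
    """Group an iterable of rows into lists of at most batch_size items."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Only one batch of rows is held in memory at any time
batch_sizes = [len(batch) for batch in batched(iter_rows(2500, seed=42), 1000)]
```

Each batch could be turned into a dataframe and encrypted before the next one is generated, at the cost of timing the batches separately.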

Naive vs. multiprocess

When we started doing data encryption, we had a naive implementation - the one I showed in the beginning. But nowadays, we employ the multiprocessing library to utilize all the cores available. So we need to test both ways.

I’ve already shown the naive way, now let me show you the multiprocess way:

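cpu_cnt = mp.cpu_count()
print(cpu_cnt)  # 14 CPUs, baby!

p = mp.Pool(cpu_cnt)
for col_name in ['name', 'surname']:
    # Values are sent to the worker processes, encrypted there and sent back
    pandas_df[col_name] = p.map(encrypt_AES, pandas_df[col_name])
p.close()
p.join()  # Wait for the workers to exit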

A gentle reminder: use async (or threads) for IO-bound tasks, and use multiprocessing to supercharge CPU-bound tasks, since Python threads are held back by the GIL.

Polars

Alright, now for the star of the show! It is surprisingly easy and elegant to do anything with Polars, I truly enjoy the syntax. Take a look:

# Define the encryption key and nonce
key = b"an example very very secret key."
nonce = b"unique nonce"  # 12 bytes (96 bits)

# Encrypt the plaintext columns
polars_df = polars_df.with_columns([
    encrypt(pl.col("name"), key=key, nonce=nonce),
    encrypt(pl.col("surname"), key=key, nonce=nonce),
])

Do you see how easy it is?! Looks nice too: you call “with_columns” without specifying aliases, so the columns are overwritten, you select the columns you want with pl.col and feed those into the encrypt function. And that’s it!
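One thing to watch on the Python side: the Rust function builds an Aes256GcmSiv cipher, which takes a 32-byte key, and a 96-bit nonce, which is 12 bytes. A wrong length would hit the expect and from_slice calls in the Rust code instead of giving a friendly Python error. Here is a minimal sketch of a check that could run before calling the plugin. check_key_and_nonce is a hypothetical helper, not something polars_encryption ships:

```python
import secrets

KEY_LEN = 32    # AES-256 key size in bytes
NONCE_LEN = 12  # 96-bit nonce, as in the comment above


def check_key_and_nonce(key, nonce):
    """Raise ValueError early if the key or nonce has the wrong length."""
    if len(key) != KEY_LEN:
        raise ValueError(f"key must be {KEY_LEN} bytes, got {len(key)}")
    if len(nonce) != NONCE_LEN:
        raise ValueError(f"nonce must be {NONCE_LEN} bytes, got {len(nonce)}")


key = b"an example very very secret key."
nonce = b"unique nonce"
check_key_and_nonce(key, nonce)  # passes silently: 32 and 12 bytes

# Outside of a blog post, a key would come from a proper random source
random_key = secrets.token_bytes(KEY_LEN)
```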

Results

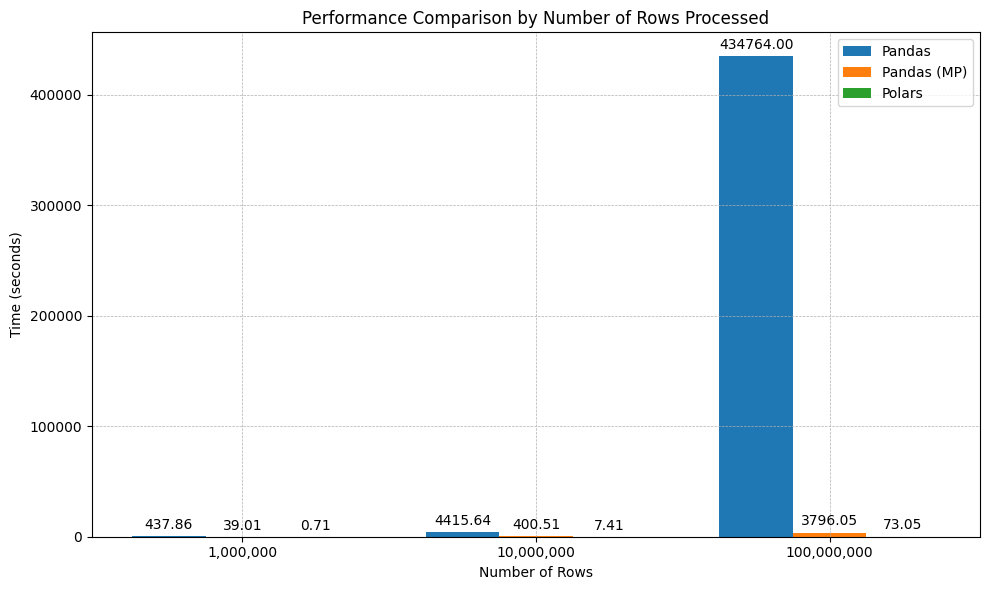

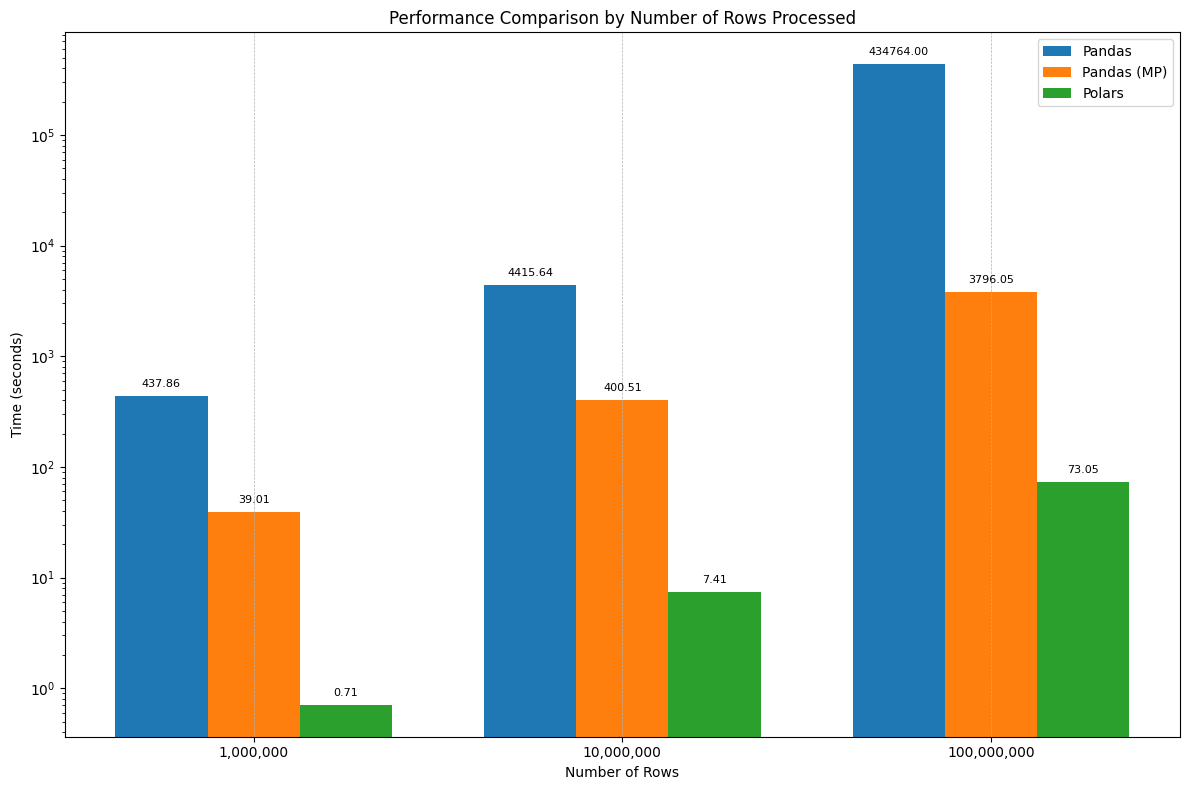

All what’s left is to time the execution of each code block - and that gives us time of execution and number of rows encryption code worked over. Let’s compile that into a chart.

Unsurprisingly, Polars won by a landslide.

I couldn’t really see much, so here is the version with Y-axis in logarithmic scale:

As you can see, Polars is roughly 500-600x faster than the naive implementation and 50x faster than the multiprocess implementation.
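The timing itself needs very little code. Here is a minimal sketch of one way to collect it, assuming time.perf_counter is precise enough for runs of this length. timed and results are hypothetical names, and the summed squares only stand in for an encryption call:

```python
import time
from contextlib import contextmanager

# One entry per measured code block: label, row count, elapsed seconds
results = []


@contextmanager
def timed(label, num_rows):
    """Measure the wall-clock time of the enclosed block and record it."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        results.append({"label": label, "rows": num_rows, "seconds": elapsed})


# Stand-in workload (assumption: replace with the Pandas or Polars block)
with timed("demo", 100_000):
    total = sum(i * i for i in range(100_000))
```

The results list can then be fed straight into a dataframe or a matplotlib chart.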

Conclusion

With the recent rise of DuckDB and Polars it is obvious that the world has caught up: you don’t need to spin up an expensive Spark cluster every time you want to chew through a measly few million rows. If you are on the cloud, serverless is the way to go for these types of workloads. And if you’re on premise, you can save on infrastructure complexity by going with Polars instead of Spark.

But even if you do have a real need for Spark, you can at least offload smaller tasks from your cluster to give it some breathing room.

And, trust me, writing plugins is much easier than you think. Even I managed to do one, and I don’t even know Rust!